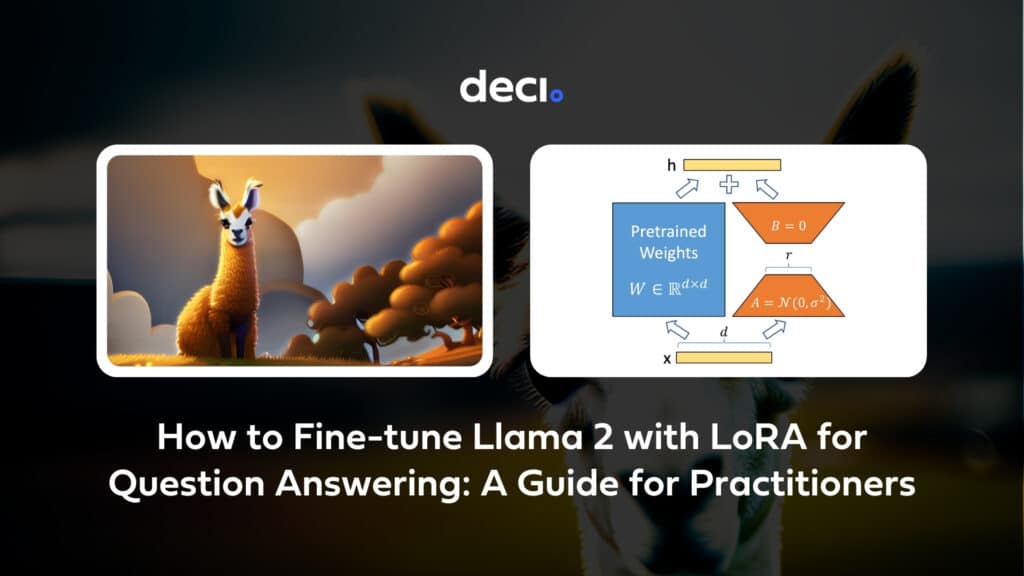

How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide for Practitioners


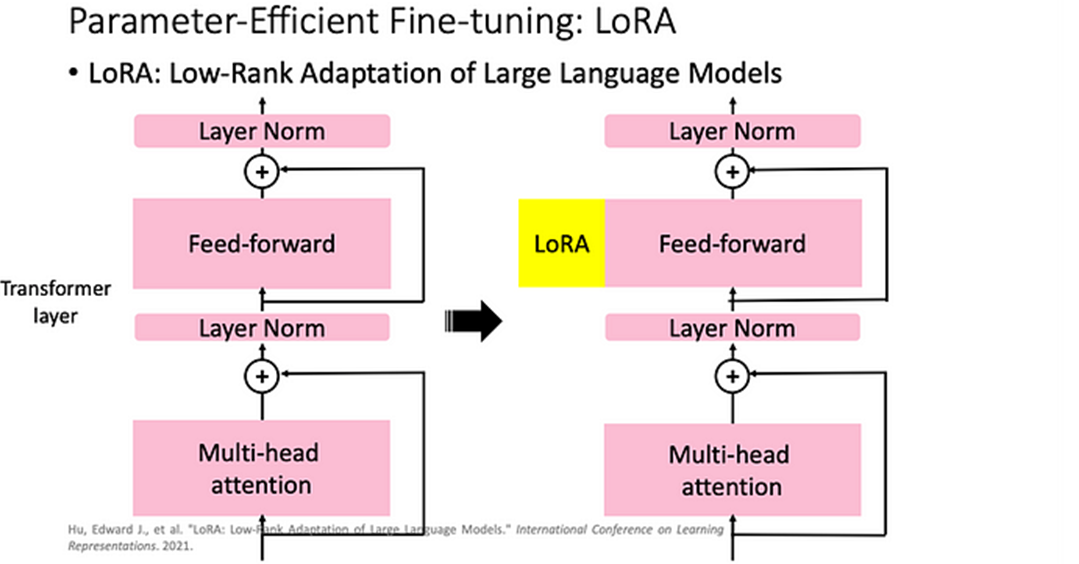

Learn how to fine-tune Llama 2 with LoRA (Low-Rank Adaptation) for question answering. This guide walks you through the prerequisites and environment setup, loading the model and tokenizer, and the quantization configuration.
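To give a rough sense of why LoRA makes fine-tuning cheaper, the sketch below counts the trainable parameters LoRA adds when a frozen weight matrix of shape d_out x d_in gets a low-rank update B·A, with B of shape d_out x r and A of shape r x d_in. The specific values are assumptions for illustration and are not taken from the guide: a hidden size of 4096 and 32 layers (the published Llama 2 7B configuration), a rank of 8, and adapters on two attention projections per layer.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Number of parameters in the LoRA factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Assumed values for illustration only (not stated in the guide).
HIDDEN_SIZE = 4096       # Llama 2 7B hidden dimension
NUM_LAYERS = 32          # Llama 2 7B transformer layers
RANK = 8                 # hypothetical LoRA rank
TARGETS_PER_LAYER = 2    # hypothetical choice: two square attention projections per layer

# Parameters in one frozen square projection matrix.
full_matrix_params = HIDDEN_SIZE * HIDDEN_SIZE

# Trainable parameters LoRA adds for that one matrix.
lora_params_per_matrix = lora_trainable_params(HIDDEN_SIZE, HIDDEN_SIZE, RANK)

# Trainable parameters across all adapted matrices in the model.
total_lora_params = lora_params_per_matrix * TARGETS_PER_LAYER * NUM_LAYERS

if __name__ == "__main__":
    print(f"frozen params per projection: {full_matrix_params:,}")
    print(f"LoRA params per projection:   {lora_params_per_matrix:,}")
    print(f"total LoRA trainable params:  {total_lora_params:,}")
```

Under these assumptions, each adapted projection trains 65,536 parameters instead of about 16.8 million, and the whole model trains roughly 4.2 million. A different rank or a different set of target modules changes the totals proportionally.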

Fine-tuning Llama 2: An overview

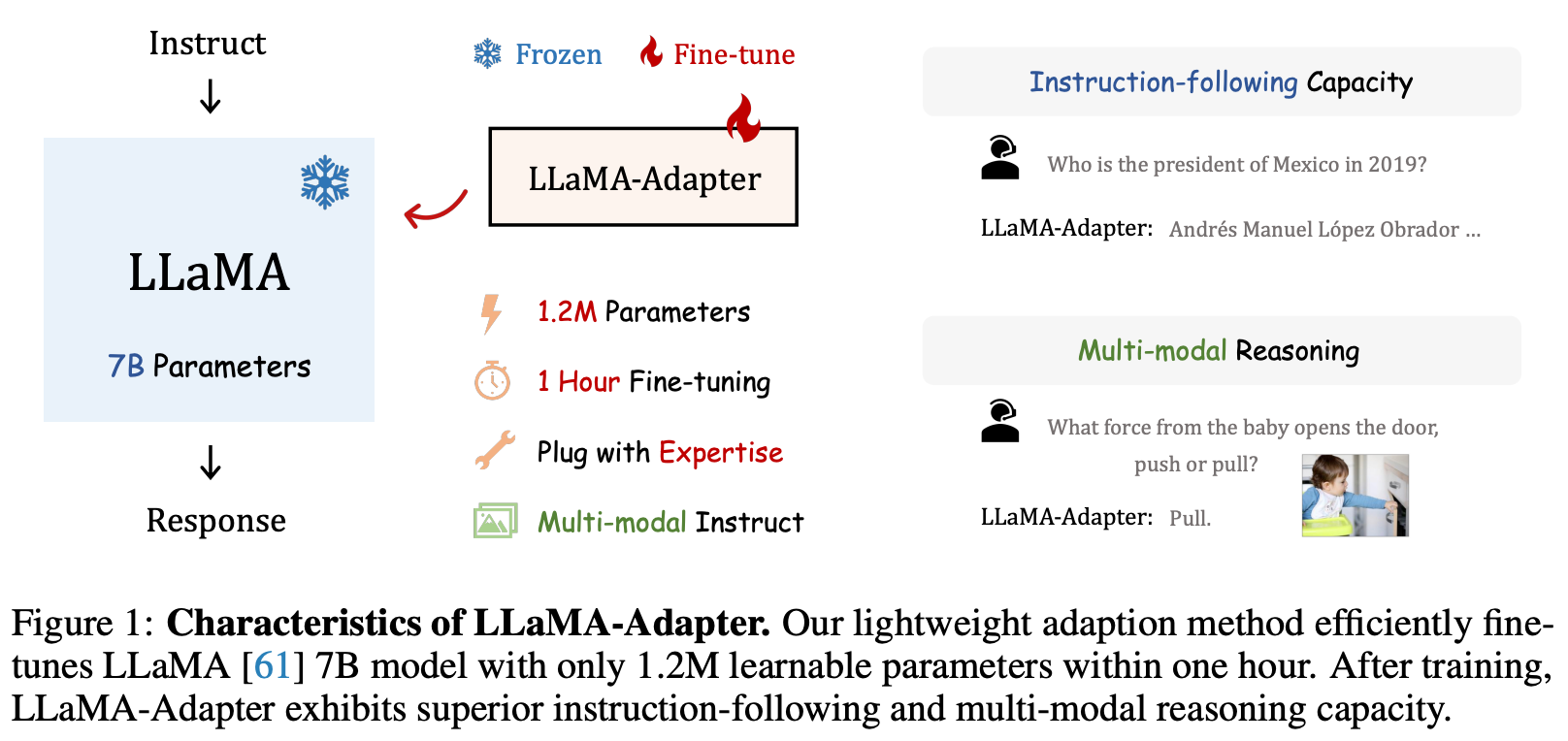

Easily Train a Specialized LLM: PEFT, LoRA, QLoRA, LLaMA-Adapter

Abhishek Mungoli on LinkedIn: LLAMA-2 Open-Source LLM: Custom Fine

Fine-Tuning Llama-2 LLM on Google Colab: A Step-by-Step Guide

FINE-TUNING LLAMA 2: DOMAIN ADAPTATION OF A PRE-TRAINED MODEL

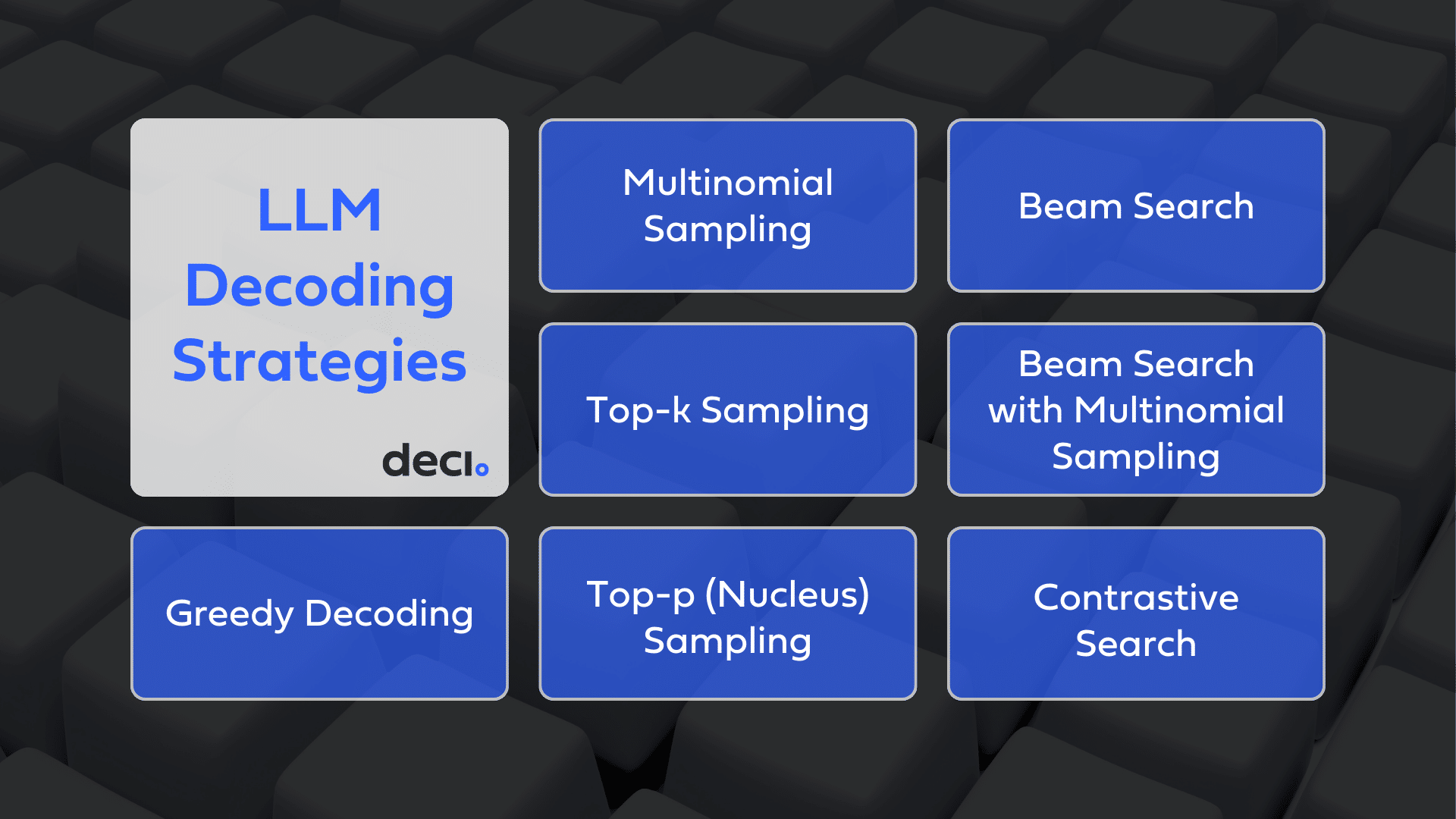

Low Rank Adaptation: A Technical deep dive

Fine-Tuning Transformers for NLP

What is fine tuning in NLP? - Addepto

Fine-Tuning Your Own Llama 2 Model

RAG Vs Fine-Tuning Vs Both: A Guide For Optimizing LLM Performance - Galileo
