How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase

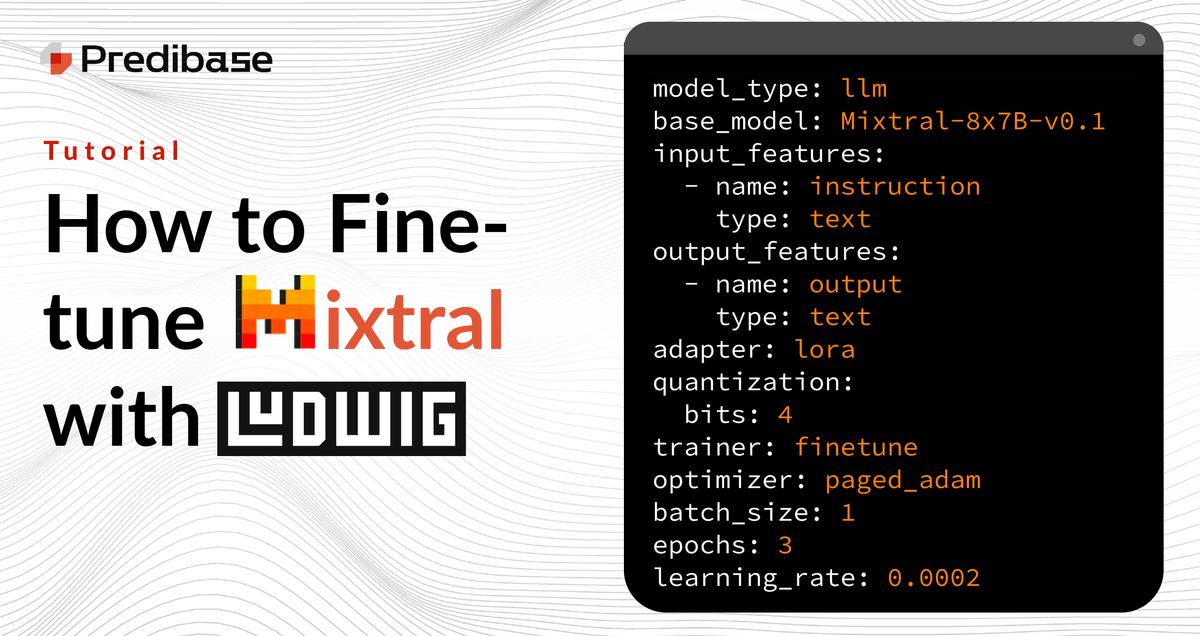

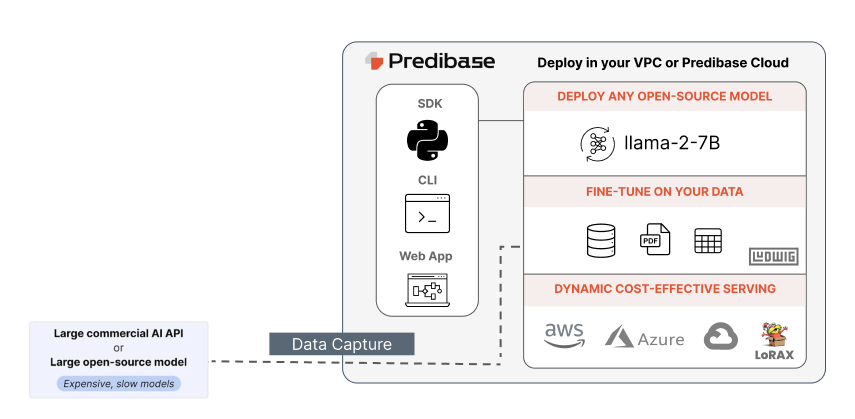

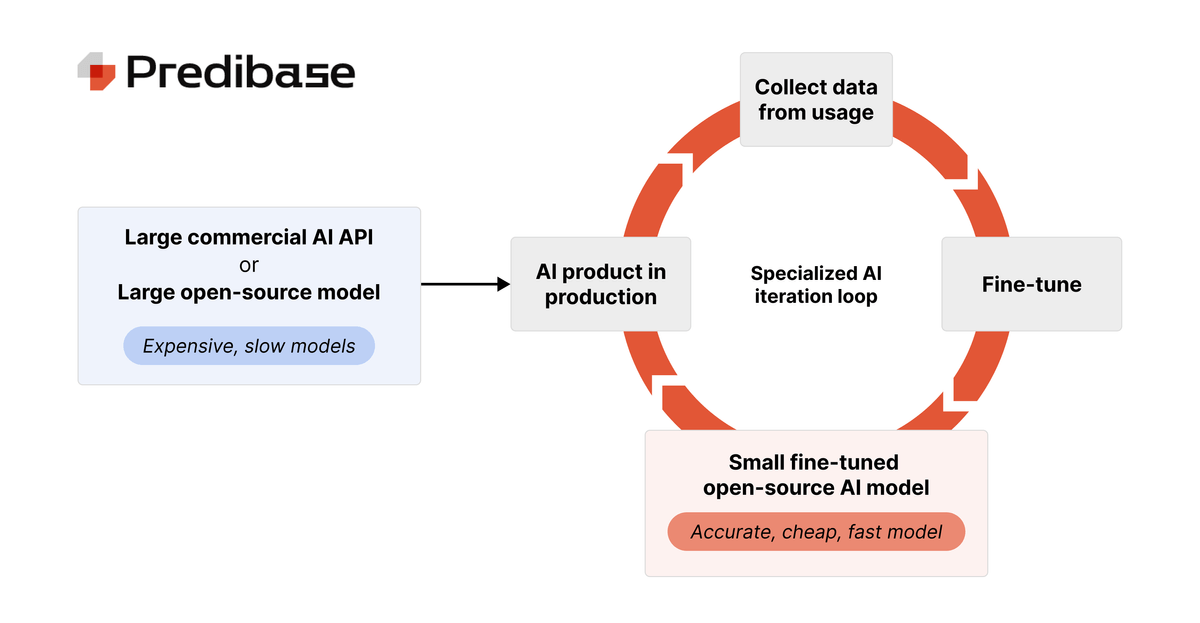

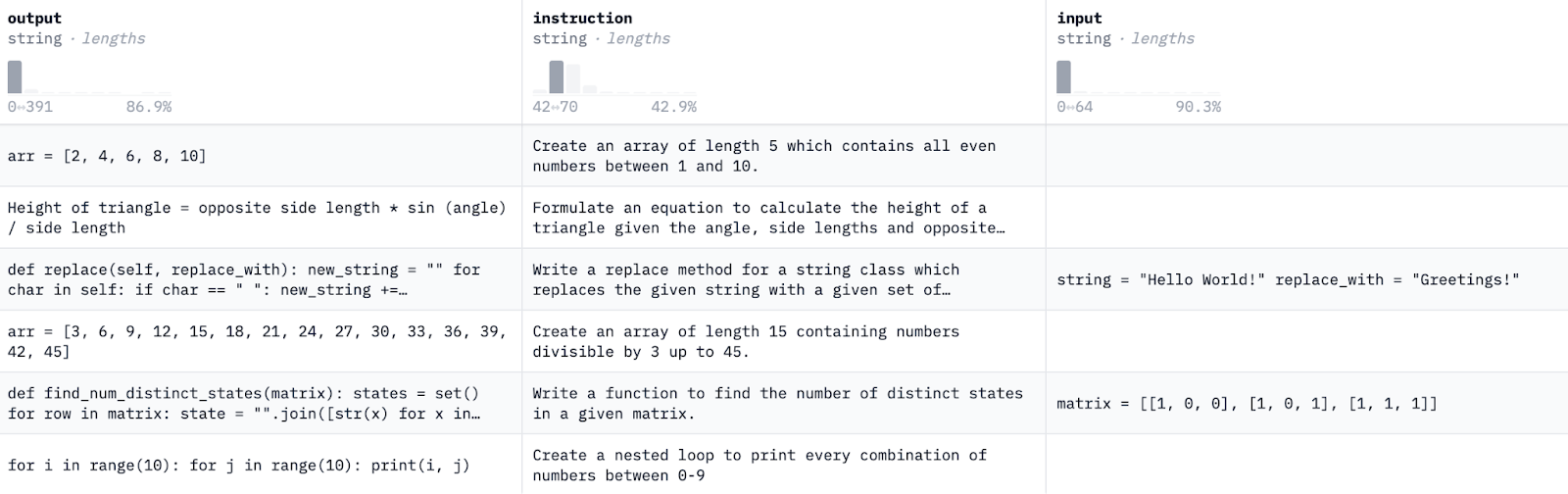

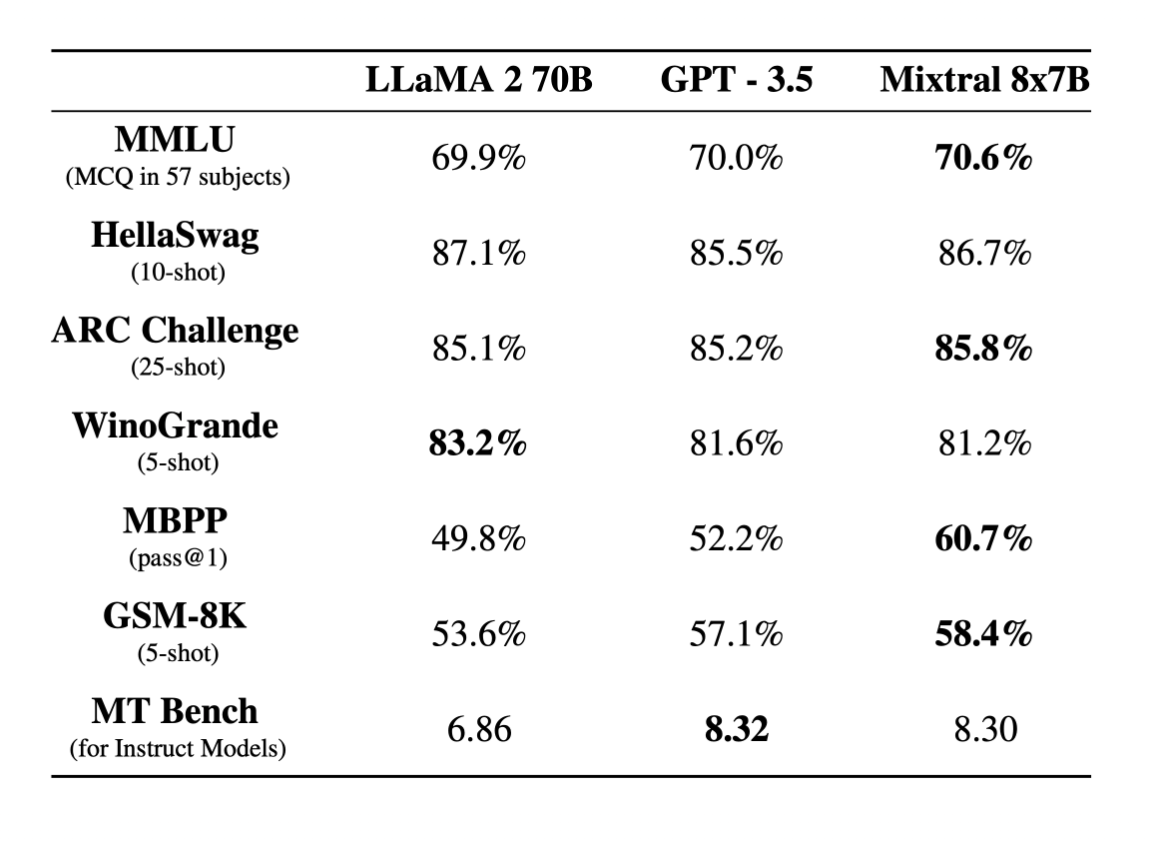

Learn how to reliably and efficiently fine-tune Mixtral 8x7B on commodity hardware in just a few lines of code with Ludwig, the open-source framework for building custom LLMs. This short tutorial provides code snippets to help get you started.
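The tutorial's own snippets are not reproduced on this page, so the following is only a minimal sketch of what a Ludwig fine-tuning setup for Mixtral 8x7B might look like. It assumes a recent Ludwig release with LLM support (the `model_type: llm` config schema), 4-bit quantization with a LoRA adapter to fit on a single GPU, and a hypothetical CSV dataset with `prompt` and `output` columns. The hyperparameters are illustrative placeholders, not values taken from the original tutorial.

```python
import logging

# Declarative Ludwig config expressed as a plain dict.
# Assumptions (not from the original article): column names, dataset path,
# and every hyperparameter value below are illustrative placeholders.
CONFIG = {
    "model_type": "llm",
    "base_model": "mistralai/Mixtral-8x7B-v0.1",
    # 4-bit quantization plus a LoRA adapter (QLoRA-style) to reduce memory use.
    "quantization": {"bits": 4},
    "adapter": {"type": "lora"},
    "input_features": [{"name": "prompt", "type": "text"}],
    "output_features": [{"name": "output", "type": "text"}],
    "trainer": {
        "type": "finetune",
        "learning_rate": 0.0001,
        "batch_size": 1,
        "gradient_accumulation_steps": 16,
        "epochs": 3,
    },
}


def fine_tune(dataset_path: str):
    """Fine-tune the base model on a CSV with `prompt` and `output` columns.

    Ludwig is imported lazily so the config can be inspected on a machine
    without Ludwig or a GPU installed.
    """
    from ludwig.api import LudwigModel

    model = LudwigModel(config=CONFIG, logging_level=logging.INFO)
    # train() returns training statistics, preprocessed data, and the output directory.
    return model.train(dataset=dataset_path)


# Example call (requires Ludwig, a suitable GPU, and access to the model weights):
# fine_tune("train.csv")  # "train.csv" is a hypothetical path
```

Keeping the configuration as data separate from the training call makes it easy to swap the base model or adapter settings without touching the code that runs training.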

Little Bear Labs on LinkedIn: LoRAX: The Open Source Framework for

Graduate from OpenAI to Open-Source: 12 best practices for

Devvret Rishi on LinkedIn: Introducing the first purely serverless

Geoffrey Angus (@GeoffreyAngus) / X

How to know about LLMs: A Guide Predibase posted on the topic

How to fine-tune Mixtral-8x7B-Instruct on your own data?

Train Finetune and Deploy any ML model Easily

Feature-based Transfer Learning vs Fine Tuning?

Full Fine-Tuning, PEFT, Prompt Engineering, or RAG?

RAG Vs Fine-Tuning Vs Both: A Guide For Optimizing LLM Performance

Fine tuning Meta's LLaMA 2 on Lambda GPU Cloud
