DeepSpeed Compression: A composable library for extreme

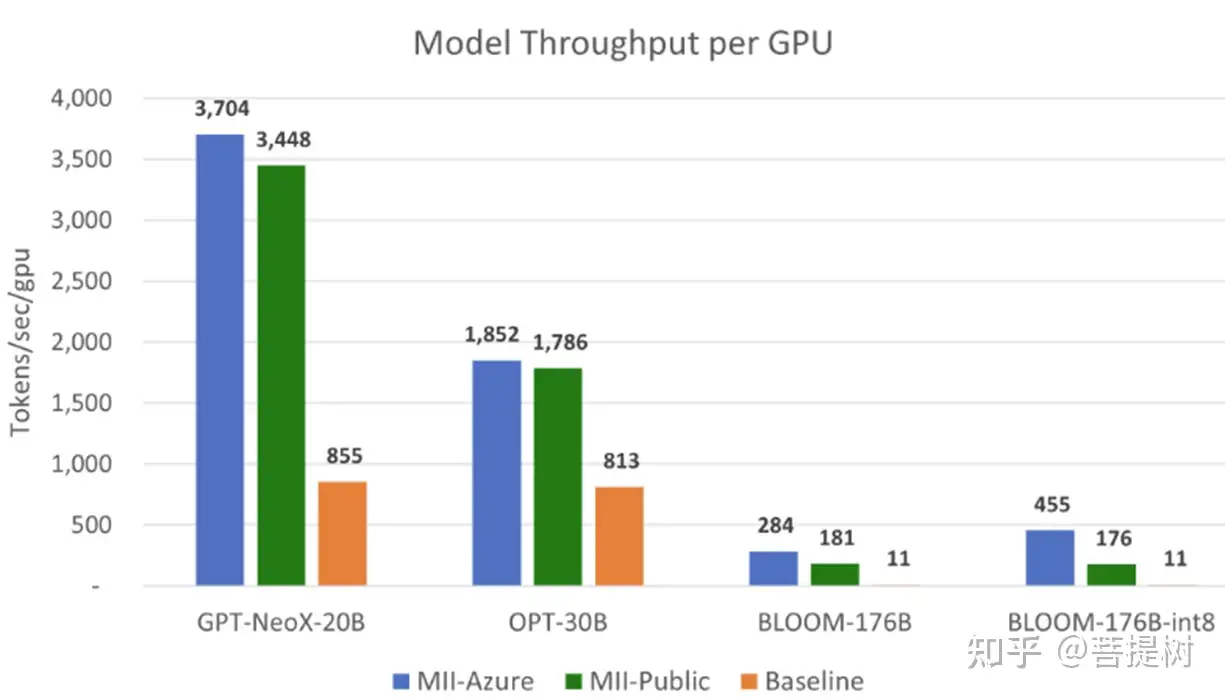

Large-scale models are revolutionizing deep learning and AI research, driving major improvements in language understanding, creative text generation, multilingual translation, and more. But despite their remarkable capabilities, the models’ large size creates latency and cost constraints that hinder the deployment of applications built on them. In particular, increased inference time and memory consumption […]

GitHub - microsoft/DeepSpeed: DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

OpenVINO™ Blog Category Page: Model Compression

Microsoft AI Releases 'DeepSpeed Compression': A Python-based Composable Library for Extreme Compression and Zero-Cost Quantization to Make Deep Learning Model Size Smaller and Inference Speed Faster - MarkTechPost

Summary of ZeroQuant and SmoothQuant Quantization - CSDN Blog

Introduction to DeepSpeed - Zhihu

(PDF) DeepSpeed Data Efficiency: Improving Deep Learning Model Quality and Training Efficiency via Efficient Data Sampling and Routing

This AI newsletter is all you need #6, by Towards AI Editorial Team

DeepSpeed download
