ICLR 2020: Compression-based bound for non-compressed network

ICLR 2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

1) The document presents a new compression-based bound on the generalization error of large deep neural networks. The bound applies to the trained network itself, even when that network has not been explicitly compressed.

2) It shows that if a trained network's weight matrices and the covariance matrices of its layer outputs are close to low rank (their eigenvalues decay quickly), then the network has a small intrinsic dimensionality and can be compressed efficiently.

3) This yields a tighter generalization bound than existing approaches and helps explain why overparameterized networks generalize well despite having more parameters than training examples.
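To make the link between fast eigenvalue decay and compressibility concrete, here is a minimal sketch. It is not the paper's construction: the 256×256 matrix, its hypothetical singular-value decay s_j = j^(-2), the kept rank r = 16, and the ridge parameter λ = 1e-4 are all illustrative choices. The sketch measures two things. It computes the error of the best rank-r approximation (truncated SVD) and compares parameter counts. It also computes a ridge-style "degrees of freedom" Σ μ_j / (μ_j + λ), used here only as an assumed proxy for intrinsic dimensionality and not necessarily the exact quantity used in the paper.

```python
import numpy as np


def degrees_of_freedom(eigvals, lam):
    """Ridge-style degrees of freedom: sum_j mu_j / (mu_j + lam).

    Used here as an illustrative proxy for intrinsic dimensionality
    (an assumption, not necessarily the paper's exact definition).
    """
    eigvals = np.asarray(eigvals, dtype=float)
    return float(np.sum(eigvals / (eigvals + lam)))


def truncated_svd(W, r):
    """Best rank-r approximation of W in spectral norm (Eckart-Young)."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r, :], s


def compress_report(W, r):
    """Relative spectral-norm error and parameter counts for a rank-r factorization."""
    W_r, _ = truncated_svd(W, r)
    rel_err = np.linalg.norm(W - W_r, 2) / np.linalg.norm(W, 2)
    m, n = W.shape
    return {
        "rel_spectral_error": float(rel_err),
        "dense_params": m * n,           # storing W directly
        "lowrank_params": r * (m + n),   # storing U_r * s_r (m x r) and V_r^T (r x n)
    }


rng = np.random.default_rng(0)
d = 256
# Hypothetical "trained" weight matrix W = U diag(s) V^T with s_j = j^(-2).
U, _ = np.linalg.qr(rng.standard_normal((d, d)))
V, _ = np.linalg.qr(rng.standard_normal((d, d)))
spectrum = np.arange(1, d + 1, dtype=float) ** -2.0
W = (U * spectrum) @ V.T

report = compress_report(W, r=16)
print(report)
# Eigenvalues of W^T W are the squared singular values.
print(degrees_of_freedom(spectrum ** 2, lam=1e-4))
```

With this spectrum, the rank-16 factorization stores 8,192 numbers instead of 65,536. By the Eckart–Young theorem, its relative spectral-norm error equals the 17th singular value, 1/289 ≈ 0.0035. The degrees-of-freedom proxy comes out far smaller than the ambient dimension 256. This is the qualitative picture the summary describes: a large network whose spectra decay quickly behaves like a much smaller one.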

ICML 2022

Meta Learning Low Rank Covariance Factors for Energy-Based Deterministic Uncertainty

ICLR 2020

Publications - OATML

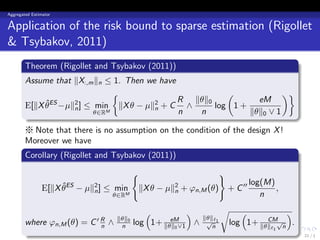

PAC-Bayesian Bound for Gaussian Process Regression and Multiple Kernel Additive Model

On the Resilience of Deep Learning for reduced-voltage FPGAs

Higher Order Fused Regularization for Supervised Learning with Grouped Parameters

Peter Richtarik
