Dataset too large to import. How can I import a certain number of rows every x hours? - Question & Answer - QuickSight Community


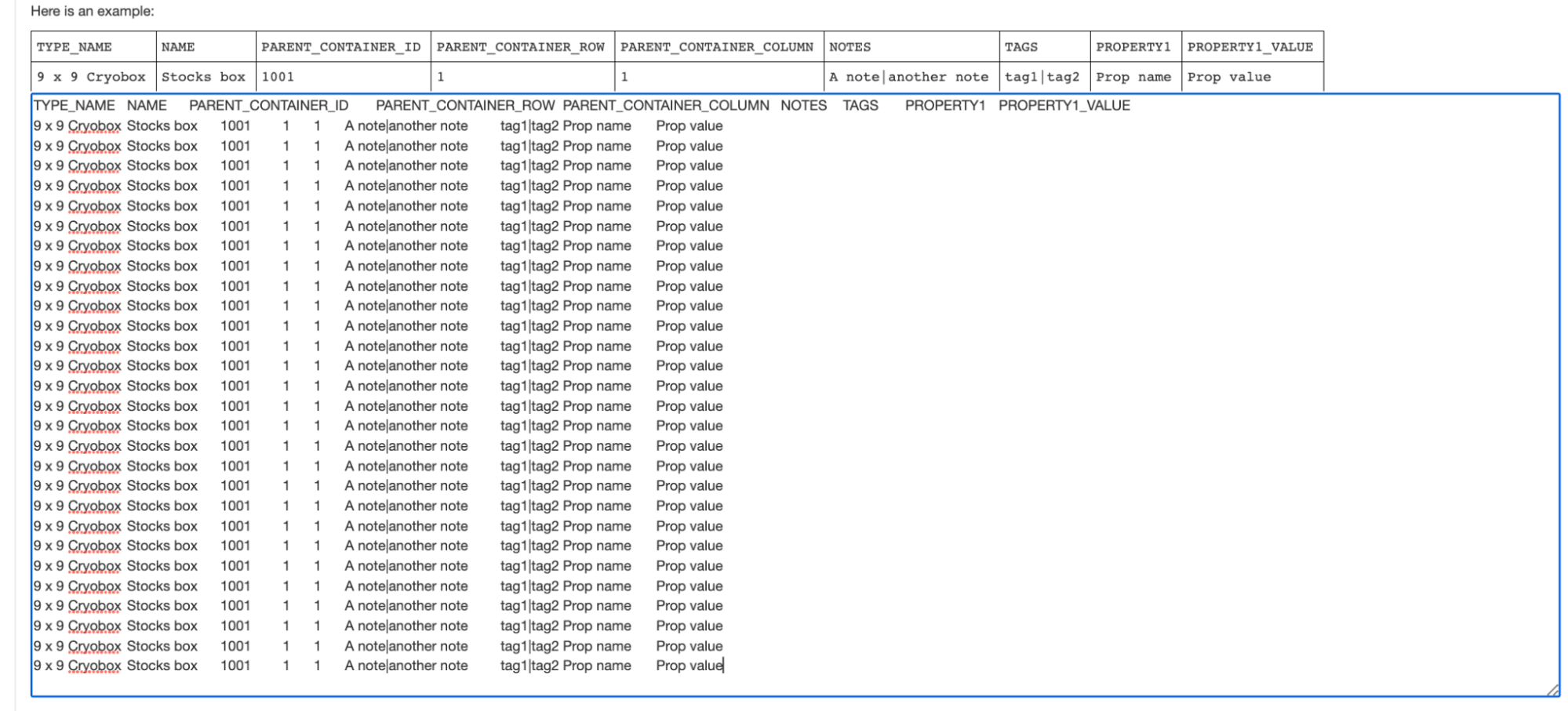

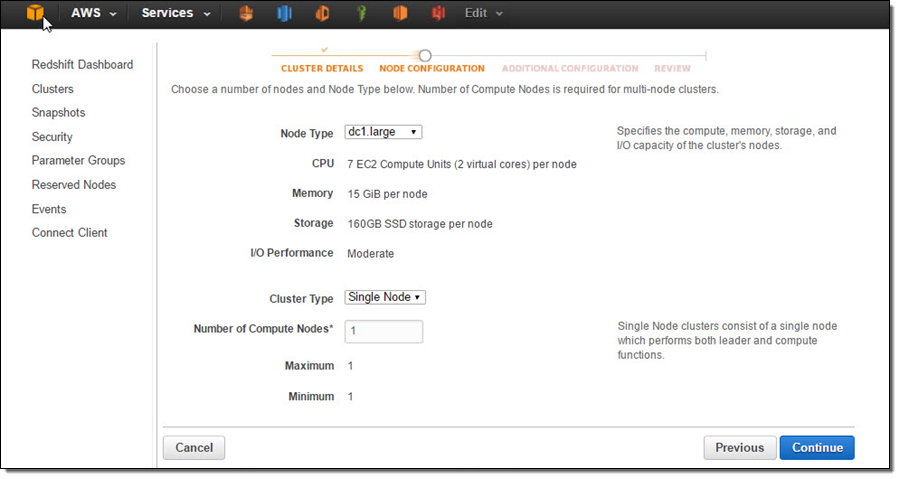

I'm trying to load data from a Redshift cluster, but the import fails because the dataset is too large to be imported using SPICE (Figure 1). How can I import, for example, 300k rows every hour so that I can gradually build up to the full dataset? Maybe an incremental refresh is the solution? The problem is that I don't understand what the "Window size" configuration means. Do I put 300000 in this field (Figure 2)?
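For context on the "Window size" question: as far as I understand the QuickSight incremental refresh settings, the window is a span of time (a number of hours, days, or weeks) measured against a date column, not a row count, so 300000 would not mean "300k rows". The sketch below only simulates that idea in plain Python. It does not call QuickSight, and the function name, the `updated_at` column, and the sample rows are all hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical helper: mimics how a time-based lookback window selects rows.
# This is an illustration only, not QuickSight's actual implementation.
def rows_in_lookback_window(rows, now, size, unit="HOUR", column="updated_at"):
    """Return the rows whose timestamp falls inside the window ending at `now`."""
    hours_per_unit = {"HOUR": 1, "DAY": 24, "WEEK": 24 * 7}
    cutoff = now - timedelta(hours=size * hours_per_unit[unit])
    return [row for row in rows if row[column] >= cutoff]

# Made-up sample data with a date column to measure the window against.
now = datetime(2024, 1, 10, 12, 0)
rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 10, 11, 30)},
    {"id": 2, "updated_at": datetime(2024, 1, 10, 9, 0)},
    {"id": 3, "updated_at": datetime(2024, 1, 3, 12, 0)},
]

last_hour = rows_in_lookback_window(rows, now, size=1, unit="HOUR")
last_day = rows_in_lookback_window(rows, now, size=1, unit="DAY")
last_week = rows_in_lookback_window(rows, now, size=1, unit="WEEK")
```

Under this reading, the number of rows picked up per refresh depends on how many rows have a timestamp inside the window, so the window cannot be used directly to cap each import at a fixed row count.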

Page 5 – Ginkgo Bioworks

Premium Incremental Refresh Detect data changes (H - Microsoft Fabric Community

AWS Quicksight vs. Tableau -Which is The Best BI Tool For You?

Datastage

QuickSight

AWS DAS-C01 Practice Exam Questions - Tutorials Dojo

How to open a very large Excel file - Quora

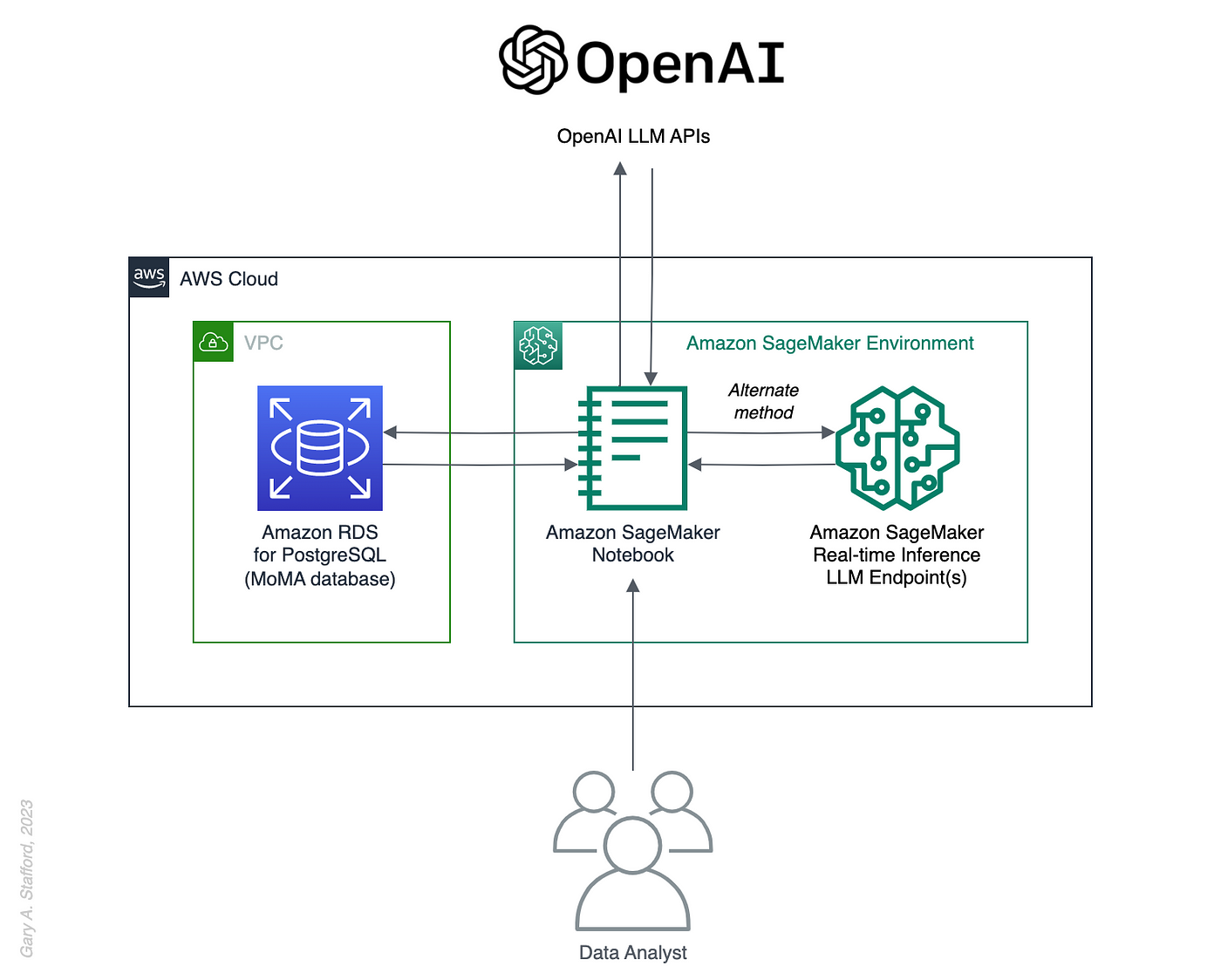

Generative AI for Analytics: Performing Natural Language Queries on RDS using SageMaker, LangChain, and LLMs, by Gary A. Stafford

Changing date field granularity - QuickSight

Quicksight: Deep Dive –
