DistributedDataParallel non-floating point dtype parameter with requires_grad=False

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in an error "Only Tensors of floating point dtype can re…"
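
The error text comes from PyTorch's rule that only floating-point tensors may require gradients. The sketch below is a minimal, single-process illustration under stated assumptions, not the fix from the issue. It reproduces that check on a plain integer tensor and shows one commonly suggested alternative: registering an integer tensor that never needs gradients as a buffer via `register_buffer` instead of as `nn.Parameter(..., requires_grad=False)`. Buffers are not returned by `parameters()`, and DDP synchronizes them through its `broadcast_buffers` option rather than treating them as trainable parameters. Whether this avoids the multi-device replication error on a particular PyTorch version is an assumption to verify. The names `Model`, `index_table`, and `grad_check_message` are hypothetical.

```python
import torch
import torch.nn as nn


def grad_check_message():
    """Return the RuntimeError text raised when an integer tensor is asked to require grad."""
    t = torch.zeros(3, dtype=torch.long)
    try:
        t.requires_grad_(True)
    except RuntimeError as e:
        return str(e)
    return None


class Model(nn.Module):
    """Hypothetical model holding an integer tensor that never needs gradients."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Hypothetical integer index table, kept as a buffer rather than an
        # nn.Parameter with requires_grad=False, so it is excluded from parameters().
        self.register_buffer("index_table", torch.arange(4, dtype=torch.long))

    def forward(self, x):
        # Reorder the input columns with the integer buffer, then apply the linear layer.
        return self.linear(x[:, self.index_table])
```

In a real multi-process run, `Model()` would then be wrapped in `torch.nn.parallel.DistributedDataParallel` as usual. Only the floating-point weights of `linear` are treated as parameters.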

distributed data parallel, gloo backend works, but nccl deadlock · Issue #17745 · pytorch/pytorch · GitHub

Aman's AI Journal • Primers • Model Compression

DistributedDataParallel non-floating point dtype parameter with requires_grad=False · Issue #32018 · pytorch/pytorch · GitHub

torch.nn (Part 1) _ 51CTO Blog _ torch.nn

[BUG] No module named 'torch._six' · Issue #2845 · microsoft/DeepSpeed · GitHub

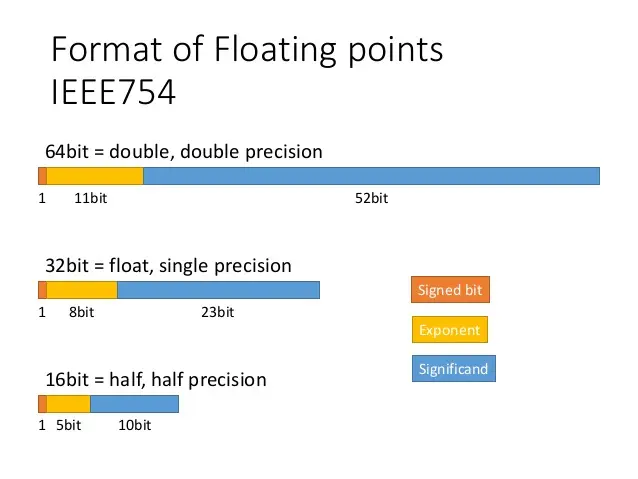

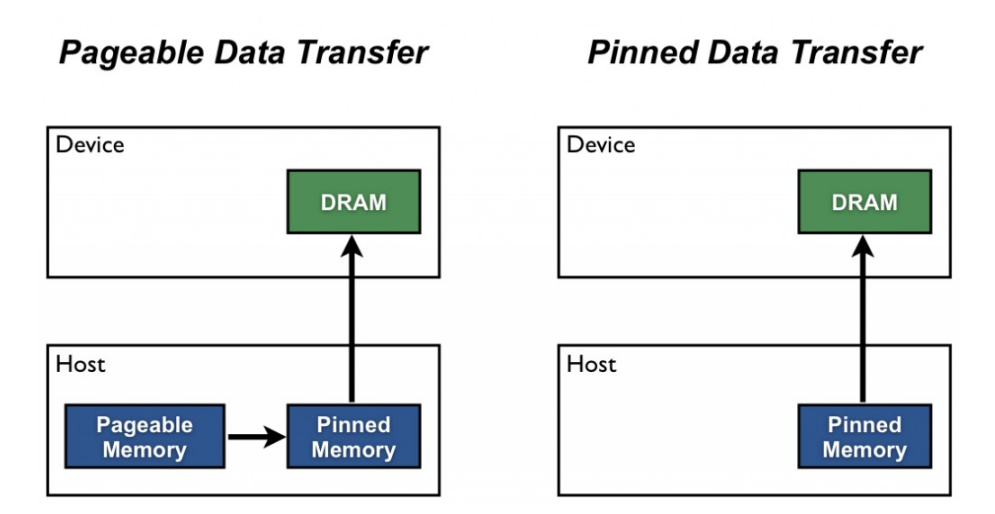

4. Memory and Compute Optimizations - Generative AI on AWS [Book]

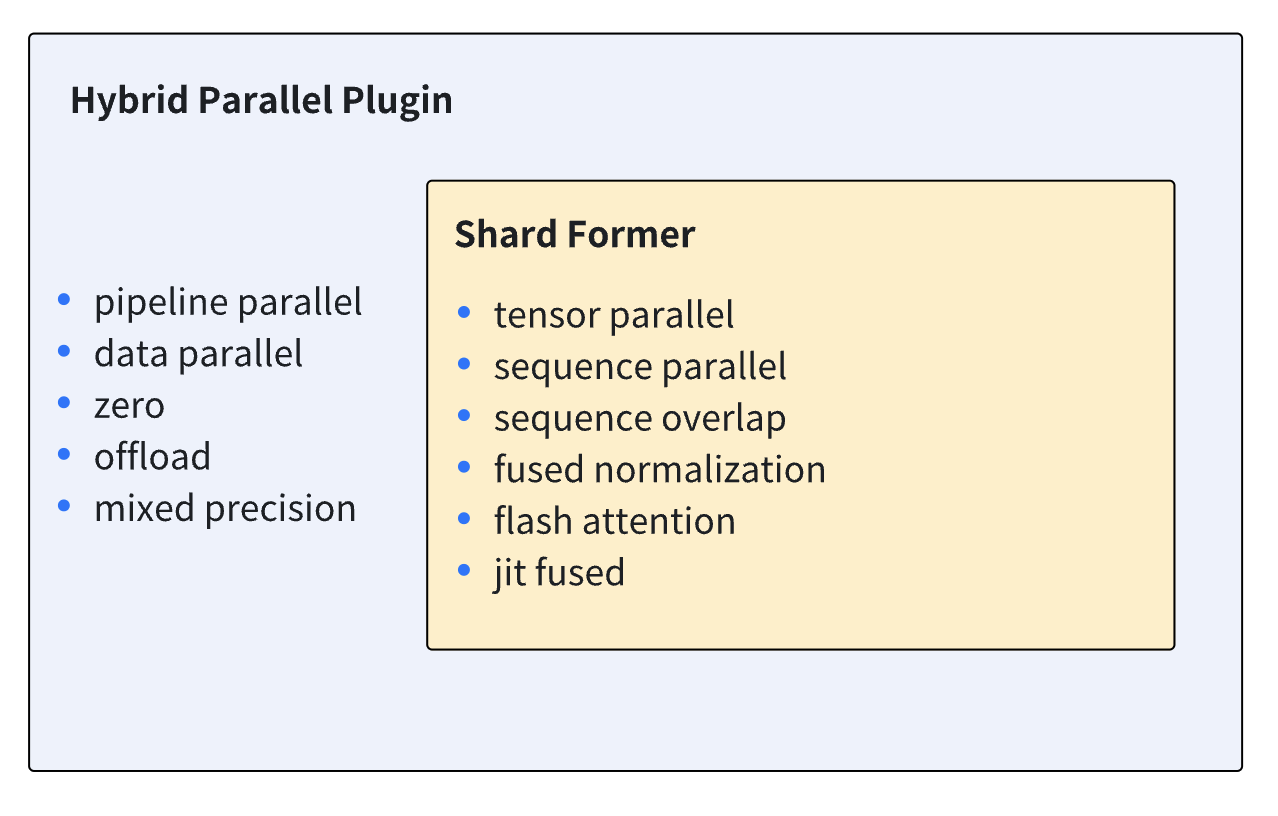

Booster Plugins

Optimizing model performance, Cibin John Joseph

Support DistributedDataParallel and DataParallel, and publish Python package · Issue #30 · InterDigitalInc/CompressAI · GitHub

torch.nn — PyTorch master documentation

pandas - Using Simple imputer replace NaN values with mean error - Data Science Stack Exchange