GitHub - bytedance/effective_transformer: Running BERT without Padding

Running BERT without Padding. Contribute to bytedance/effective_transformer development by creating an account on GitHub.

bert-base-uncased have weird result on Squad 2.0 · Issue #2672 · huggingface/transformers · GitHub

inconsistent BertTokenizer and BertTokenizerFast · Issue #14844 · huggingface/transformers · GitHub

Want to use bert-base-uncased model without internet connection · Issue #11871 · huggingface/transformers · GitHub

[PDF] Packing: Towards 2x NLP BERT Acceleration

How to Train BERT from Scratch using Transformers in Python - The Python Code

transformer · GitHub Topics · GitHub

[2211.05102] 1 Introduction

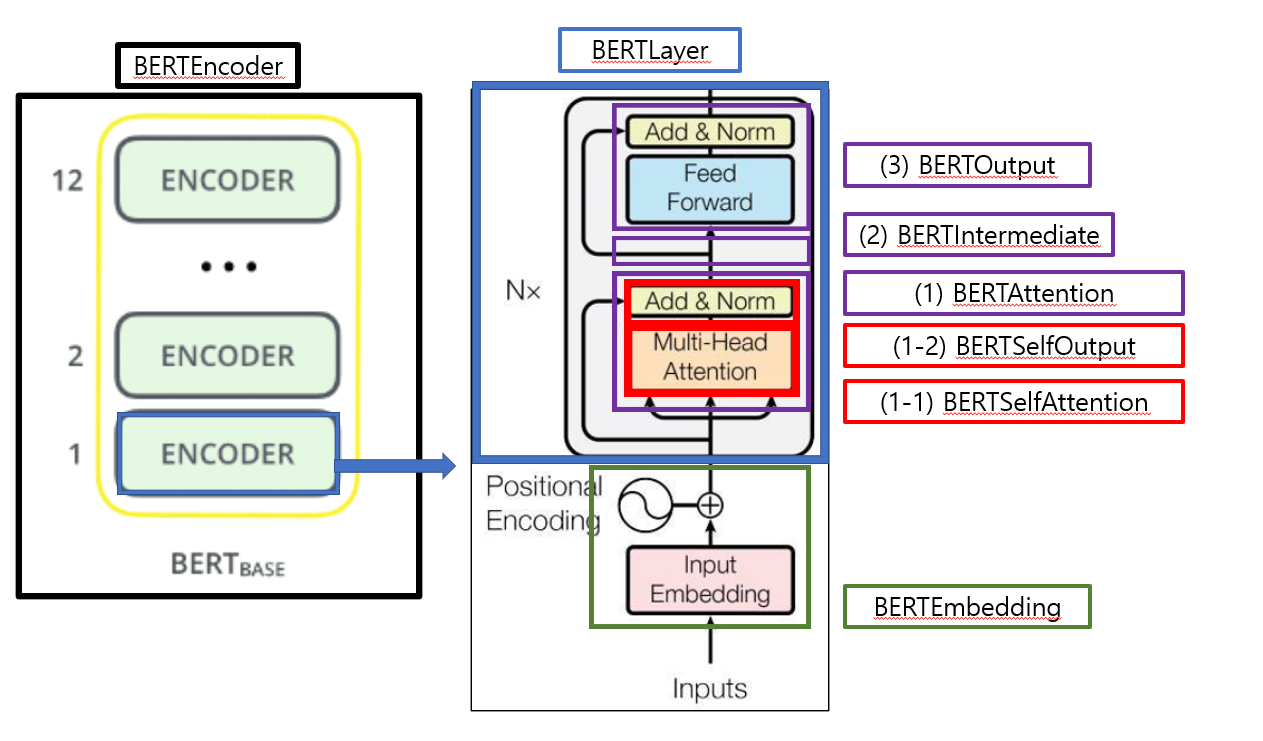

code review 1) BERT - AAA (All About AI)

Use Bert model without pretrained weights · Issue #11047 · huggingface/transformers · GitHub

Structure Size Calculation: With and Without Padding

Illustration of 1D convolution with (bottom) and without (top

spacing - \ulcorner without padding to letter - TeX - LaTeX Stack

Make Your Own Neural Network: Calculating the Output Size of Convolutions and Transpose Convolutions
