DistributedDataParallel non-floating point dtype parameter with requires_grad=False · Issue #32018 · pytorch/pytorch · GitHub

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating-point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in the error "Only Tensors of floating point dtype can re…
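
A minimal sketch of the kind of model the report describes may help. It assumes a hypothetical toy module, `ModelWithIntParam`, whose names and shapes are illustrative and not taken from the issue. It does not set up DistributedDataParallel or reproduce the multi-GPU failure. It only shows that an integer-typed parameter is accepted with `requires_grad=False` and rejected by autograd when gradients are requested.

```python
import torch
import torch.nn as nn


class ModelWithIntParam(nn.Module):
    """Hypothetical toy model: one float layer plus one integer-typed parameter."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # An integer parameter is only accepted when requires_grad=False.
        self.index = nn.Parameter(
            torch.tensor([0, 1], dtype=torch.long), requires_grad=False
        )

    def forward(self, x):
        # Use the integer parameter to select output columns.
        return self.linear(x)[:, self.index]


model = ModelWithIntParam()

# List the parameters whose dtype is not floating point.
non_float = [n for n, p in model.named_parameters() if not p.is_floating_point()]
print(non_float)

# Requesting gradients on an integer tensor is rejected by autograd.
try:
    nn.Parameter(torch.tensor([0, 1], dtype=torch.long))  # requires_grad defaults to True
    raised = False
except RuntimeError as err:
    raised = True
    print(err)
```

Listing non-floating-point parameters this way is one quick check for whether a model falls into the category the report is about before wrapping it in DistributedDataParallel.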

[PDF] PyTorch distributed

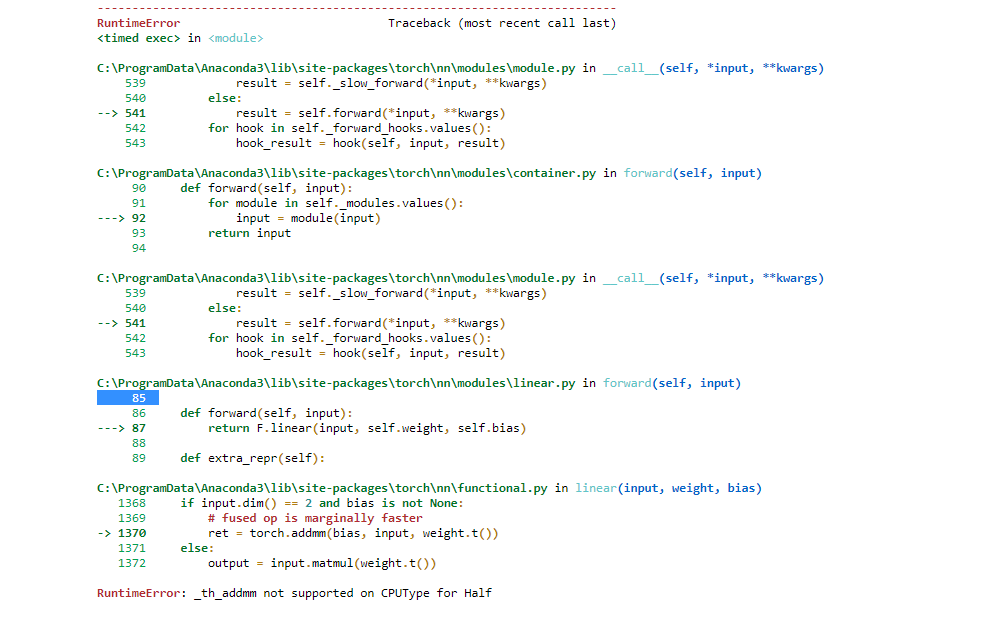

Training on 16bit floating point - PyTorch Forums

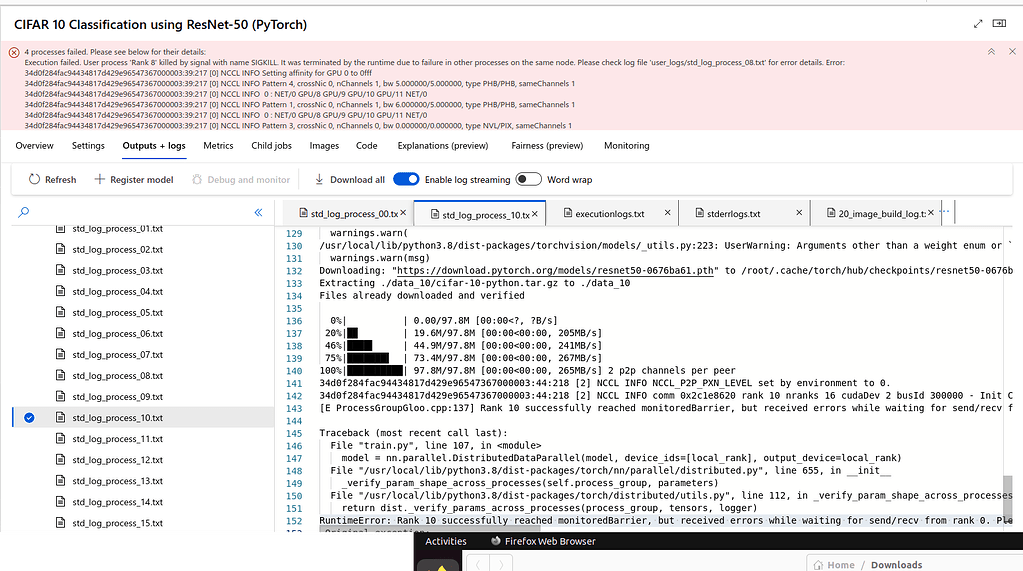

PyTorch DDP -- RuntimeError: Rank 10 successfully reached

Introducing Distributed Data Parallel support on PyTorch Windows

Torch 2.1 compile + FSDP (mixed precision) + LlamaForCausalLM

RuntimeError: DistributedDataParallel is not needed · Issue #225

Careful! Data-type-related errors in pytorch and numpy: Expected object of

Distributed data parallel and distributed model parallel in

Issue for DataParallel · Issue #8637 · pytorch/pytorch · GitHub

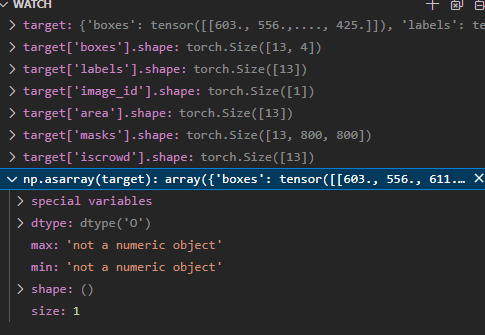

TypeError: can't convert np.ndarray of type numpy.object_. The

Rethinking PyTorch Fully Sharded Data Parallel (FSDP) from First

torch.distributed.barrier Bug with pytorch 2.0 and Backend=NCCL

8 Useful Pandas Features for Data-Set Handling, by Stephen Fordham

Getting Started with Machine Learning Using TensorFlow and Keras

0. Load Packages We will be working with the numpy